Marketers looking to optimise their content have long relied on A/B tests to discover what works for their audiences. But testing can only deliver real impact when you can trust the quality, accuracy, and diversity of the content being tested.

Goodbye to ‘blank page’ problems.

AI tools make it easier than ever to create multiple variations of text and images for A/B testing and multivariate testing. Marketers can experiment with different headlines, copy, visuals, calls-to-action, and other elements to determine what resonates best with their target audience.

And with generative AI tools like ChatGPT, the click of a button gives you as much content as you can handle. So AI gives you quantity, but what about quality?

Is your AI-generated content good?

‘Good’ can mean a few things:

- Is it factually accurate? Foundation models like the ones behind ChatGPT can ‘hallucinate’, generating false information.

- Does your messaging have the right meaning? General-purpose AI isn’t trained on your brand and messaging, so it doesn’t know what you really want.

- Is it on-brand? You need an AI solution that can capture your brand tone of voice and write in your brand’s style.

But there’s one factor that matters more than all others: Does it perform? Are you inspiring people to feel, think or act in the way you intended? After all, that’s the whole purpose of marketing language – chosen with care to stand out and engage with a specific audience.

The keys to high-performance content.

Many factors contribute to content performance. One important factor is language diversity and the use of different combinations of linguistic features such as words, phrases, emojis, sentiment, semantics, and syntactic structure.

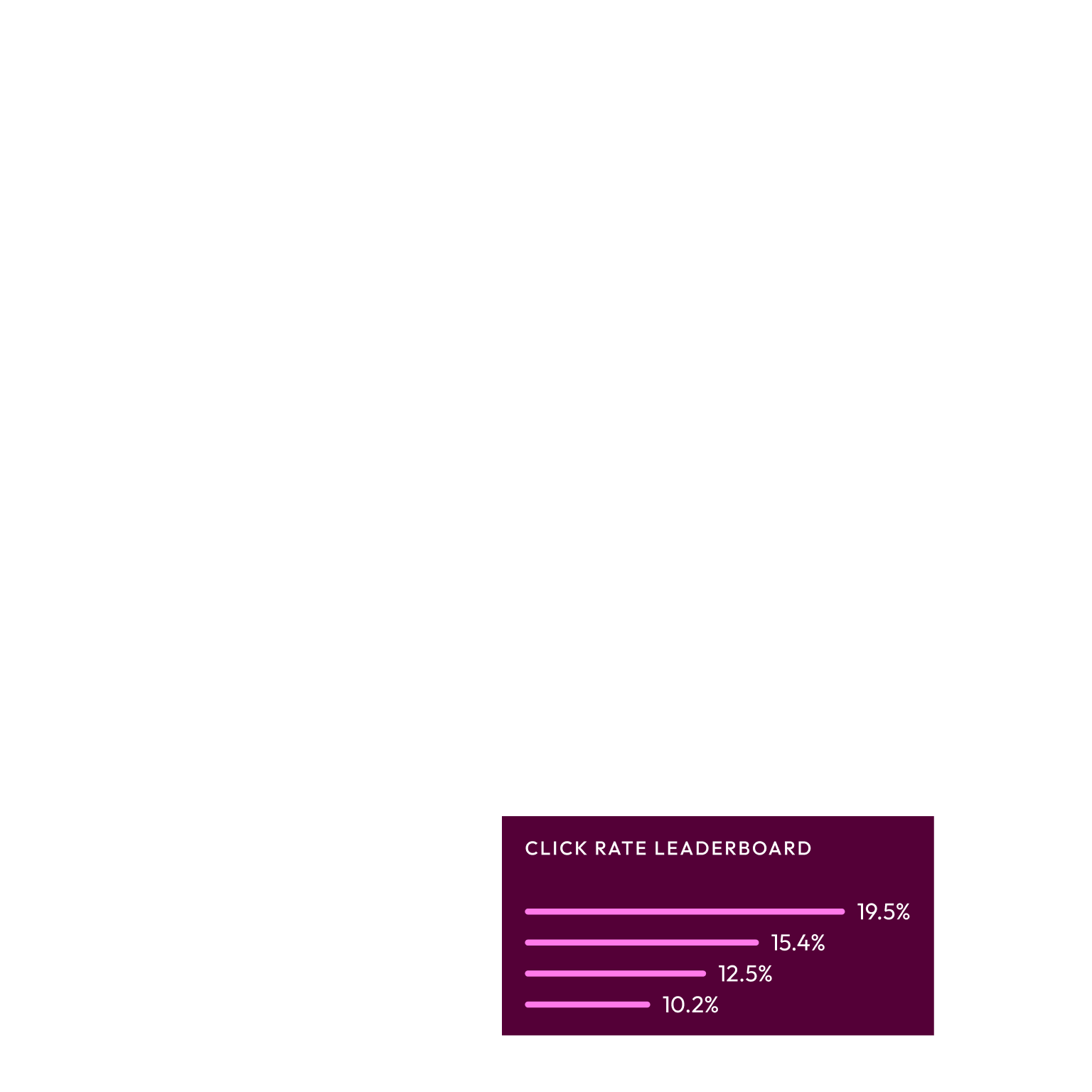

There is a positive correlation between language diversity and performance. On average, brands that experiment with diverse language in their subject lines enjoy an open rate uplift of 10% and a click rate uplift of 16%.
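As a rough illustration of how an uplift figure like the ones above is derived, the sketch below computes relative uplift from a control and a variant open rate. It assumes ‘uplift’ means relative improvement over a baseline, which the figures above do not specify. The function name and the sample rates are hypothetical, not real campaign data.

```python
def relative_uplift(control_rate: float, variant_rate: float) -> float:
    """Return the variant's relative improvement over the control, as a fraction."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Hypothetical open rates, for illustration only.
control_open_rate = 0.20  # baseline subject line
variant_open_rate = 0.22  # more linguistically diverse subject line

uplift = relative_uplift(control_open_rate, variant_open_rate)
print(f"Open rate uplift: {uplift:.0%}")
```

With these made-up numbers, a move from a 20% to a 22% open rate is a 10% relative uplift, even though the absolute difference is only two percentage points.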

But there are millions of ways to write a subject line – and no single formula for success.

Want to know what resonates best with your audience? You need to test a wide range of linguistic features in a variety of combinations. Individual linguistic variables examined in isolation (e.g. length or emojis) tend not to correlate with performance.
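To get a feel for how quickly combinations multiply, here is a minimal sketch that enumerates every combination of a few linguistic features. The feature names and their values are assumptions chosen for illustration; a real test would use whatever features matter for your brand, and far more of them.

```python
from itertools import product

# Hypothetical linguistic features and the values each one can take.
features = {
    "length": ["short", "medium", "long"],
    "emoji": ["none", "one", "several"],
    "sentiment": ["urgent", "curious", "friendly", "neutral"],
    "structure": ["question", "statement", "command"],
}

# Build every combination: one value per feature.
combinations = [
    dict(zip(features, values)) for values in product(*features.values())
]

print(len(combinations))  # 3 * 3 * 4 * 3 = 108
print(combinations[0])
```

Even four small features produce 108 distinct combinations, which is why testing one variable at a time covers so little of the space.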

Humans are not good at writing linguistically diverse marketing messages at scale. With cognitive bias, risk-aversion, and preconceived notions about what will and won’t work, most of us will instinctively lean on language which has performed well in the past, things that sound good to our own ear, or what others have used.

And if we can’t remove bias in the content we create ourselves, we certainly can’t remove it from the content that we ask AI to generate.

Harnessing generative AI for marketing.

Now that creation has been democratised and you can create all kinds of content with far less effort, you then need to determine what’s good and what’s bad: what works and what doesn’t. The only repeatable way of doing this is to use a data-driven model.

Jacquard’s proprietary AI content generation technology exceeds what publicly available large language models (LLMs) can produce on their own. Our Deep Learning model, trained on nearly a decade of content experiments, takes the guesswork out of creating engaging content for your audience – and helps you to drive sustained brand affinity.

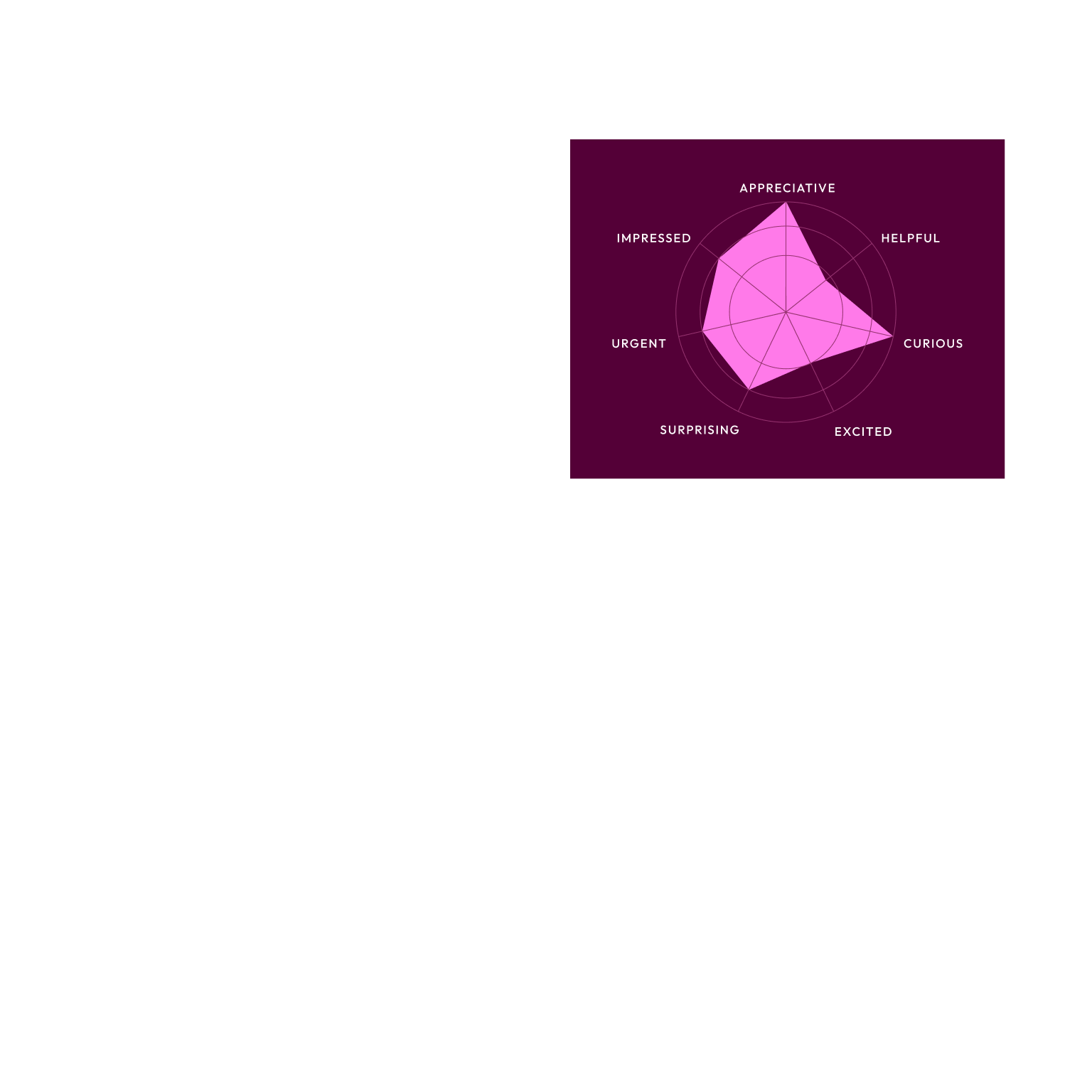

We do this by analysing your content experiments to reveal the words, emojis, and sentiments different audiences respond to most. You can track the performance of your optimisation tests, both at the individual A/B/n experiment level and at the account level across multiple experiments. And the platform will show you exactly how much additional revenue you’re driving through Jacquard’s content AI.

When it comes to performance, it’s not good enough to just create more content. Truly great testing comes from language diversity.

Ready to transform your messaging?

Book a demo to see how our purpose-built tooling designed for scale can help your brand to resonate everywhere.